Processing LISN data with Pipeline API (BERT projection)¶

In this example we will process the LISN (Laboratoire Interdisciplinaire des Sciences du Numérique) dataset using the Pipeline API. The LISN dataset contains all articles from HAL (https://hal.archives-ouvertes.fr/) published by authors from LISN between 2000 and 2022.

The pipeline consists of the following steps:

extract entities (articles, authors, teams, labs, words) from a collection of scientific articles

use Bidirectional Encoder Representations from Transformers (BERT) to generate vector representation of the entities

use Uniform Manifold Approximation and Projection (UMAP) to project those entities in 2 dimensions

use KMeans clustering to cluster entities

find their nearest neighbors.

Create LISN Dataset¶

We will first create a Dataset for LISN.

The CSV file containing the data can be downloaded from https://zenodo.org/record/7323538/files/lisn_2000_2022.csv. We will use version 2.0.0 of the dataset. When we pass the URL to CSVDataset, it downloads the file if it does not exist locally.

from cartodata.pipeline.datasets import CSVDataset # noqa

from pathlib import Path # noqa

ROOT_DIR = Path.cwd().parent

# The directory where files necessary to load dataset columns reside

INPUT_DIR = ROOT_DIR / "datas"

# The directory where the generated dump files will be saved

TOP_DIR = ROOT_DIR / "dumps"

dataset = CSVDataset(name="lisn", input_dir=INPUT_DIR, version="2.0.0", filename="lisn_2000_2022.csv",
                     fileurl="https://zenodo.org/record/7323538/files/lisn_2000_2022.csv",
                     columns=None, index_col=0)

This checks whether the dataset file already exists locally. If it does not, the file is downloaded from the specified URL and then loaded into a pandas DataFrame.
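The download-if-missing behaviour described above can be sketched with the standard library. This is a minimal illustration, not cartodata's implementation: `ensure_local_file` and its `fetch` parameter are hypothetical names introduced here, and the real CSVDataset may handle versions and directories differently.

```python
from pathlib import Path
from urllib.request import urlretrieve


def ensure_local_file(path, url, fetch=urlretrieve):
    """Download `url` to `path` only if `path` does not exist yet.

    Returns True when a download happened and False when the local copy
    was reused. `fetch` is injectable (hypothetical parameter) so the
    logic can be exercised without network access.
    """
    path = Path(path)
    if path.exists():
        # A local copy is already present: nothing to download.
        return False
    path.parent.mkdir(parents=True, exist_ok=True)
    fetch(url, path)
    return True
```

Calling this helper twice with the same target path would trigger at most one download; the second call finds the file and returns immediately.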

Let’s view the dataset.

df = dataset.df

df.head(5)

The dataframe that we just read consists of 4262 articles as rows.

df.shape[0]

4262

Its columns hold the authors, abstract, keywords, title, research labs and domain of each article.

print(*df.columns, sep="\n")

structId_i

authFullName_s

en_abstract_s

en_keyword_s

en_title_s

structAcronym_s

producedDateY_i

producedDateM_i

halId_s

docid

en_domainAllCodeLabel_fs

Now we should define our entities and set the column names corresponding to those entities from the data file. We have 5 entities:

| entity | column name(s) in the file |
|--------|----------------------------|
| articles | en_title_s |
| authors | authFullName_s |
| teams | structAcronym_s |
| labs | structAcronym_s |
| words | en_abstract_s, en_title_s, en_keyword_s, en_domainAllCodeLabel_fs |

Cartolabe provides 4 types of columns:

IdentityColumn: The entity of this column represents the main entity of the dataset. The column data corresponding to the entity in the file should contain a single value and this value should be unique among column values. There can only be one IdentityColumn in the dataset.

CSColumn: The entity of this column type is related to the main entity, and can contain single or comma separated values.

CorpusColumn: The entity of this column type is the corpus related to the main entity. This can be a combination of multiple columns in the file. It uses a modified version of CountVectorizer (https://scikit-learn.org/stable/modules/generated/sklearn.feature_extraction.text.CountVectorizer.html#sklearn.feature_extraction.text.CountVectorizer).

TfidfCorpusColumn: The entity of this column type is the corpus related to the main entity. This can be a combination of multiple columns in the file or can contain filepath from which to read the text corpus. It uses TfidfVectorizer (https://scikit-learn.org/stable/modules/generated/sklearn.feature_extraction.text.TfidfVectorizer.html).

In this dataset, Articles is our main entity. We will define it as IdentityColumn:

from cartodata.pipeline.columns import IdentityColumn, CSColumn, CorpusColumn # noqa

articles_column = IdentityColumn(nature="articles", column_name="en_title_s")

The authFullName_s column, used for the authors entity, lists the authors of each article as comma-separated values. We will define it as a CSColumn:

authors_column = CSColumn(nature="authors", column_name="authFullName_s", filter_min_score=4)

Here we have set filter_min_score=4 to indicate that, while processing the data, authors who have authored fewer than 4 articles will be filtered out. When it is not set, the default value is 0, meaning that no entities are filtered.
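To make the effect of such a threshold concrete, here is a small pure-Python sketch. It assumes the score of a value is the number of articles (rows) it appears in; `filter_by_min_score` and the sample names are hypothetical and only mimic the idea, not cartodata's code.

```python
from collections import Counter


def filter_by_min_score(rows, min_score):
    """Drop values that appear in fewer than `min_score` rows.

    `rows` holds one comma-separated string per article.
    Returns the filtered rows and the scores of the kept values.
    """
    split_rows = [[v.strip() for v in row.split(",") if v.strip()] for row in rows]
    # Count each value once per row, so a score is a number of articles.
    counts = Counter(v for values in split_rows for v in set(values))
    kept = {v for v, n in counts.items() if n >= min_score}
    filtered = [[v for v in values if v in kept] for values in split_rows]
    return filtered, {v: counts[v] for v in kept}


# Illustrative data: "Cy" appears in a single article and is filtered out.
rows = ["Ada, Bob", "Ada", "Ada, Cy", "Bob, Ada"]
filtered, scores = filter_by_min_score(rows, min_score=2)
```

With a threshold of 0 every value would be kept, which matches the default described above.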

The teams and labs entities both use the structAcronym_s column, which also has comma-separated values. This column contains both the teams and the labs of each article. For the teams entity we will keep only teams, and for the labs entity we will keep only labs.

The file ../datas/inria-teams.csv contains the list of Inria teams. For the teams entity, we will whitelist the values from inria-teams.csv, and for the labs entity, we will blacklist the values from inria-teams.csv.

teams_column = CSColumn(nature="teams", column_name="structAcronym_s", whitelist="inria-teams.csv",
                        filter_min_score=4)

labs_column = CSColumn(nature="labs", column_name="structAcronym_s", blacklist="inria-teams.csv",
                       filter_min_score=4)
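The whitelist/blacklist split can be pictured as two complementary set filters over the same list of values. The sketch below is an assumption about the general idea only; `split_by_list` and the sample values are illustrative, and cartodata reads the list from a file rather than from a Python set.

```python
def split_by_list(values, listed, mode):
    """Filter `values` against `listed`.

    mode="whitelist" keeps only the listed values,
    mode="blacklist" keeps only the values that are not listed.
    """
    listed = set(listed)
    if mode == "whitelist":
        return [v for v in values if v in listed]
    if mode == "blacklist":
        return [v for v in values if v not in listed]
    raise ValueError(f"unknown mode: {mode!r}")


# Illustrative structures of one article and a toy list of team names.
structures = ["LRI", "TAO", "CNRS", "Parkas"]
team_names = {"TAO", "Parkas"}

teams = split_by_list(structures, team_names, "whitelist")
labs = split_by_list(structures, team_names, "blacklist")
```

Because the two filters are complementary, every value of the column ends up in exactly one of the two entities.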

For the words entity, we are going to use multiple columns to create a text corpus for each article:

words_column = CorpusColumn(nature="words",
                            column_names=["en_abstract_s", "en_title_s", "en_keyword_s", "en_domainAllCodeLabel_fs"],
                            stopwords="stopwords.txt", nb_grams=4, min_df=10, max_df=0.05,
                            min_word_length=5, normalize=True)
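The following pure-Python sketch shows how parameters of this kind typically interact. It assumes the scikit-learn CountVectorizer conventions (an integer min_df is an absolute document count, a float max_df is a proportion of documents) and assumes that short words and stopwords are dropped before n-grams are built; `select_terms` is a hypothetical helper and cartodata's modified vectorizer may behave differently.

```python
from collections import Counter


def ngrams(tokens, max_n):
    """Yield all contiguous n-grams of length 1..max_n as space-joined strings."""
    for n in range(1, max_n + 1):
        for i in range(len(tokens) - n + 1):
            yield " ".join(tokens[i:i + n])


def select_terms(docs, max_n, min_df, max_df, min_word_length, stopwords=()):
    """Return the n-grams whose document frequency lies in [min_df, max_df * len(docs)]."""
    stop = set(stopwords)
    doc_freq = Counter()
    for doc in docs:
        tokens = [t for t in doc.lower().split()
                  if len(t) >= min_word_length and t not in stop]
        # A set ensures each n-gram is counted once per document.
        doc_freq.update(set(ngrams(tokens, max_n)))
    limit = max_df * len(docs)
    return sorted(t for t, df in doc_freq.items() if min_df <= df <= limit)


docs = ["sparse matrix methods", "sparse matrix storage",
        "dense matrix methods", "graph theory"]
terms = select_terms(docs, max_n=2, min_df=2, max_df=0.5, min_word_length=5)
```

In this toy corpus "matrix" occurs in three of four documents and is dropped by max_df, while terms seen in a single document are dropped by min_df.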

Now we are going to set the columns of the dataset:

dataset.set_columns([articles_column, authors_column, teams_column, labs_column, words_column])

We can set the columns in any order that we prefer. We will set the first entity as identity entity and the last entity as the corpus. If we set the entities in a different order, the Dataset will put the main entity as first.

The dataset for LISN data is ready. Now we will create and run our pipeline. For this pipeline, we will:

run BERT projection -> N-dimensional

run UMAP projection -> 2D

cluster entities

find nearest neighbors

Create and run pipeline¶

We will first create a pipeline with the dataset.

from cartodata.pipeline.common import Pipeline # noqa

pipeline = Pipeline(dataset=dataset, top_dir=TOP_DIR, input_dir=INPUT_DIR, hierarchical_dirs=True)

The workflow generates the natures from dataset columns.

pipeline.natures

['articles', 'authors', 'teams', 'labs', 'words']

Creating correspondence matrices for each entity type¶

From this table of articles, we want to extract matrices that map the correspondence between these articles and the entities we want to use.

Pipeline has a generate_entity_matrices function that generates matrices and scores for each entity (nature) specified for the dataset.

matrices, scores = pipeline.generate_entity_matrices(force=True)

The order of matrices and scores corresponds to the order in which the dataset columns were specified.

dataset.natures

['articles', 'authors', 'teams', 'labs', 'words']
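Since the results are positional, it can be convenient to key them by nature instead of remembering indices. The helper below is a hypothetical convenience, not part of cartodata; the tuples stand in for the real matrices.

```python
def by_nature(natures, items):
    """Pair each nature name with the item at the same position."""
    if len(natures) != len(items):
        raise ValueError("natures and items must have the same length")
    return dict(zip(natures, items))


natures = ["articles", "authors", "teams", "labs", "words"]
# Placeholder values; in practice `items` would be `matrices` or `scores`.
shapes = [(4262, 4262), (4262, 694), (4262, 33), (4262, 549), (4262, 4645)]
shape_of = by_nature(natures, shapes)
```

With the real objects, a lookup such as `by_nature(dataset.natures, matrices)["authors"]` would return the same matrix as `matrices[1]`.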

Articles

The first matrix in matrices and the first Series in scores correspond to articles.

The type for article column is IdentityColumn. It generates a matrix that simply maps each article to itself.

articles_mat = matrices[0]

articles_mat.shape

(4262, 4262)

Having type IdentityColumn, each article will have score 1.

articles_scores = scores[0]

articles_scores.shape

articles_scores.head()

Termination and Confluence of Higher-Order Rewrite Systems 1.0

Efficient Self-stabilization 1.0

Resource-bounded relational reasoning: induction and deduction through stochastic matching 1.0

Reasoning about generalized intervals : Horn representability and tractability 1.0

Proof Nets and Explicit Substitutions 1.0

dtype: float64

Authors

The second matrix in matrices and score in scores correspond to authors.

The type for the authors column is CSColumn. It generates a sparse matrix where rows correspond to articles and columns correspond to authors.

authors_mat = matrices[1]

authors_mat.shape

(4262, 694)

Here we see that after filtering out authors with fewer than 4 articles, there are 694 distinct authors.

The series, which we named authors_scores, contains the list of authors extracted from the column authFullName_s, each with a score equal to the number of rows (articles) to which the author is mapped in the authors_mat matrix.

authors_scores = scores[1]

authors_scores.head()

Sébastien Tixeuil 47

Michèle Sebag 137

Khaldoun Al Agha 20

Ralf Treinen 5

Christine Eisenbeis 27

dtype: int64

If we look at the 4th column of the matrix, which corresponds to the author Ralf Treinen, we can see that it has 5 non-zero rows, each one identifying an article he authored.

print(authors_mat[:, 3])

(6, 0) 1

(21, 0) 1

(37, 0) 1

(2729, 0) 1

(3573, 0) 1
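The relationship between the matrix, a column's non-zero rows and the scores can be reproduced on toy data. The sketch below uses a plain dictionary keyed by (row, column) as a stand-in for the sparse matrix; all function names and sample values are hypothetical.

```python
def build_incidence(rows, vocabulary):
    """Return {(row, col): 1} for every (article, entity) pair."""
    col_of = {name: j for j, name in enumerate(vocabulary)}
    entries = {}
    for i, names in enumerate(rows):
        for name in names:
            if name in col_of:
                entries[(i, col_of[name])] = 1
    return entries


def column_rows(entries, col):
    """Row indices (articles) with a non-zero value in column `col`."""
    return sorted(i for (i, j) in entries if j == col)


def column_scores(entries, n_cols):
    """Sum of each column, i.e. the number of articles per entity."""
    totals = [0] * n_cols
    for (_, j), value in entries.items():
        totals[j] += value
    return totals


rows = [["A", "B"], ["B"], ["A", "C"], ["B", "A"]]
vocabulary = ["A", "B", "C"]
entries = build_incidence(rows, vocabulary)
articles_of_b = column_rows(entries, 1)
scores = column_scores(entries, len(vocabulary))
```

On a SciPy sparse matrix, the row indices of a column can be obtained in a similar spirit with `authors_mat[:, 3].nonzero()[0]`.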

Teams

The third matrix in matrices and score in scores correspond to teams.

The type for the teams column is CSColumn. It generates a sparse matrix where rows correspond to articles and columns correspond to teams.

teams_mat = matrices[2]

teams_mat.shape

(4262, 33)

Here we see that after filtering out teams with fewer than 4 articles, there are 33 distinct teams.

The series, which we named teams_scores, contains the list of teams extracted from the column structAcronym_s, each with a score equal to the number of rows (articles) to which the team is mapped in the teams_mat matrix.

teams_scores = scores[2]

teams_scores.head()

TAO 533

Regal 10

Parkas 14

DAHU 7

GALLIUM 23

dtype: int64

Labs

The fourth matrix in matrices and score in scores correspond to labs.

The type for the labs column is CSColumn. It generates a sparse matrix where rows correspond to articles and columns correspond to labs.

labs_mat = matrices[3]

labs_mat.shape

(4262, 549)

Here we see that after filtering out labs with fewer than 4 articles, there are 549 distinct labs.

The series, which we named labs_scores, contains the list of labs extracted from the column structAcronym_s, each with a score equal to the number of rows (articles) to which the lab is mapped in the labs_mat matrix.

labs_scores = scores[3]

labs_scores.head()

LRI 4789

UP11 6271

CNRS 10217

LISN 5203

X 487

dtype: int64

Words

The fifth matrix in matrices and score in scores correspond to words.

The type for the words column is CorpusColumn. It creates a corpus by merging multiple text columns of the dataset, and then extracts n-grams from that corpus. Finally, it generates a sparse matrix where rows correspond to articles and columns correspond to n-grams.

words_mat = matrices[4]

words_mat.shape

(4262, 4645)

Here we see that there are 4645 distinct n-grams.

The series, which we named words_scores, contains the list of n-grams, each with a score equal to the number of rows (articles) to which the n-gram is mapped in the words_mat matrix.

words_scores = scores[4]

words_scores.head()

abilities 21

ability 164

absence 53

absolute 19

abstract 174

dtype: int64

Dimension reduction¶

One way to see the matrices that we created is as coordinates in the space of all articles. What we want to do is to reduce the dimension of this space to make it easier to work with and see.

BERT projection

We’ll start by using BERT to reduce the number of rows in our data.

from cartodata.pipeline.projectionnd import BertProjection # noqa

bert_projection = BertProjection()

pipeline.set_projection_nd(bert_projection)

Now we can run the BERT projection on the matrices.

matrices_nD = pipeline.do_projection_nD(force=True)

for nature, matrix in zip(pipeline.natures, matrices_nD):
    print(f"{nature} ------------- {matrix.shape}")

Using torch device: cpu

Fetching 4 files: 100%|██████████| 4/4 [00:00<00:00, 8.35it/s]

/usr/local/lib/python3.9/site-packages/adapters/loading.py:165: FutureWarning: You are using `torch.load` with `weights_only=False` (the current default value), which uses the default pickle module implicitly. It is possible to construct malicious pickle data which will execute arbitrary code during unpickling (See https://github.com/pytorch/pytorch/blob/main/SECURITY.md#untrusted-models for more details). In a future release, the default value for `weights_only` will be flipped to `True`. This limits the functions that could be executed during unpickling. Arbitrary objects will no longer be allowed to be loaded via this mode unless they are explicitly allowlisted by the user via `torch.serialization.add_safe_globals`. We recommend you start setting `weights_only=True` for any use case where you don't have full control of the loaded file. Please open an issue on GitHub for any issues related to this experimental feature.

state_dict = torch.load(weights_file, map_location="cpu")

Processing batches: 78%|███████▊ | 331/427 [30:10<08:52, 5.55s/it]

Processing batches: 78%|███████▊ | 332/427 [30:15<08:43, 5.51s/it]

Processing batches: 78%|███████▊ | 333/427 [30:20<08:33, 5.46s/it]

Processing batches: 78%|███████▊ | 334/427 [30:26<08:27, 5.46s/it]

Processing batches: 78%|███████▊ | 335/427 [30:31<08:20, 5.44s/it]

Processing batches: 79%|███████▊ | 336/427 [30:37<08:21, 5.51s/it]

Processing batches: 79%|███████▉ | 337/427 [30:42<08:14, 5.49s/it]

Processing batches: 79%|███████▉ | 338/427 [30:48<08:08, 5.48s/it]

Processing batches: 79%|███████▉ | 339/427 [30:54<08:10, 5.57s/it]

Processing batches: 80%|███████▉ | 340/427 [30:59<07:57, 5.48s/it]

Processing batches: 80%|███████▉ | 341/427 [31:04<07:50, 5.47s/it]

Processing batches: 80%|████████ | 342/427 [31:10<07:47, 5.50s/it]

Processing batches: 80%|████████ | 343/427 [31:15<07:42, 5.51s/it]

Processing batches: 81%|████████ | 344/427 [31:21<07:35, 5.49s/it]

Processing batches: 81%|████████ | 345/427 [31:26<07:32, 5.52s/it]

Processing batches: 81%|████████ | 346/427 [31:32<07:23, 5.48s/it]

Processing batches: 81%|████████▏ | 347/427 [31:37<07:16, 5.45s/it]

Processing batches: 81%|████████▏ | 348/427 [31:43<07:11, 5.46s/it]

Processing batches: 82%|████████▏ | 349/427 [31:48<07:03, 5.44s/it]

Processing batches: 82%|████████▏ | 350/427 [31:54<07:02, 5.48s/it]

Processing batches: 82%|████████▏ | 351/427 [31:59<06:53, 5.44s/it]

Processing batches: 82%|████████▏ | 352/427 [32:05<06:50, 5.48s/it]

Processing batches: 83%|████████▎ | 353/427 [32:10<06:45, 5.47s/it]

Processing batches: 83%|████████▎ | 354/427 [32:16<06:40, 5.48s/it]

Processing batches: 83%|████████▎ | 355/427 [32:21<06:31, 5.43s/it]

Processing batches: 83%|████████▎ | 356/427 [32:27<06:30, 5.50s/it]

Processing batches: 84%|████████▎ | 357/427 [32:32<06:28, 5.54s/it]

Processing batches: 84%|████████▍ | 358/427 [32:38<06:22, 5.55s/it]

Processing batches: 84%|████████▍ | 359/427 [32:43<06:19, 5.59s/it]

Processing batches: 84%|████████▍ | 360/427 [32:49<06:13, 5.58s/it]

Processing batches: 85%|████████▍ | 361/427 [32:54<06:06, 5.56s/it]

Processing batches: 85%|████████▍ | 362/427 [33:00<06:00, 5.55s/it]

Processing batches: 85%|████████▌ | 363/427 [33:06<05:55, 5.56s/it]

Processing batches: 85%|████████▌ | 364/427 [33:11<05:50, 5.57s/it]

Processing batches: 85%|████████▌ | 365/427 [33:17<05:41, 5.51s/it]

Processing batches: 86%|████████▌ | 366/427 [33:22<05:38, 5.55s/it]

Processing batches: 86%|████████▌ | 367/427 [33:28<05:35, 5.58s/it]

Processing batches: 86%|████████▌ | 368/427 [33:33<05:26, 5.53s/it]

Processing batches: 86%|████████▋ | 369/427 [33:39<05:22, 5.57s/it]

Processing batches: 87%|████████▋ | 370/427 [33:45<05:18, 5.59s/it]

Processing batches: 87%|████████▋ | 371/427 [33:50<05:10, 5.54s/it]

Processing batches: 87%|████████▋ | 372/427 [33:56<05:08, 5.60s/it]

Processing batches: 87%|████████▋ | 373/427 [34:01<05:00, 5.56s/it]

Processing batches: 88%|████████▊ | 374/427 [34:07<04:56, 5.60s/it]

Processing batches: 88%|████████▊ | 375/427 [34:12<04:49, 5.57s/it]

Processing batches: 88%|████████▊ | 376/427 [34:18<04:41, 5.52s/it]

Processing batches: 88%|████████▊ | 377/427 [34:23<04:34, 5.48s/it]

Processing batches: 89%|████████▊ | 378/427 [34:29<04:29, 5.51s/it]

Processing batches: 89%|████████▉ | 379/427 [34:34<04:24, 5.52s/it]

Processing batches: 89%|████████▉ | 380/427 [34:40<04:19, 5.53s/it]

Processing batches: 89%|████████▉ | 381/427 [34:45<04:14, 5.54s/it]

Processing batches: 89%|████████▉ | 382/427 [34:51<04:10, 5.56s/it]

Processing batches: 90%|████████▉ | 383/427 [34:56<04:03, 5.54s/it]

Processing batches: 90%|████████▉ | 384/427 [35:02<03:57, 5.53s/it]

Processing batches: 90%|█████████ | 385/427 [35:07<03:50, 5.49s/it]

Processing batches: 90%|█████████ | 386/427 [35:13<03:43, 5.46s/it]

Processing batches: 91%|█████████ | 387/427 [35:18<03:36, 5.41s/it]

Processing batches: 91%|█████████ | 388/427 [35:24<03:31, 5.42s/it]

Processing batches: 91%|█████████ | 389/427 [35:29<03:27, 5.46s/it]

Processing batches: 91%|█████████▏| 390/427 [35:35<03:21, 5.46s/it]

Processing batches: 92%|█████████▏| 391/427 [35:40<03:16, 5.44s/it]

Processing batches: 92%|█████████▏| 392/427 [35:45<03:09, 5.41s/it]

Processing batches: 92%|█████████▏| 393/427 [35:50<02:57, 5.21s/it]

Processing batches: 92%|█████████▏| 394/427 [35:55<02:53, 5.27s/it]

Processing batches: 93%|█████████▎| 395/427 [36:01<02:50, 5.33s/it]

Processing batches: 93%|█████████▎| 396/427 [36:06<02:45, 5.33s/it]

Processing batches: 93%|█████████▎| 397/427 [36:12<02:40, 5.34s/it]

Processing batches: 93%|█████████▎| 398/427 [36:17<02:35, 5.37s/it]

Processing batches: 93%|█████████▎| 399/427 [36:22<02:30, 5.39s/it]

Processing batches: 94%|█████████▎| 400/427 [36:28<02:27, 5.47s/it]

Processing batches: 94%|█████████▍| 401/427 [36:34<02:23, 5.51s/it]

Processing batches: 94%|█████████▍| 402/427 [36:39<02:17, 5.49s/it]

Processing batches: 94%|█████████▍| 403/427 [36:44<02:10, 5.45s/it]

Processing batches: 95%|█████████▍| 404/427 [36:50<02:05, 5.46s/it]

Processing batches: 95%|█████████▍| 405/427 [36:56<02:00, 5.48s/it]

Processing batches: 95%|█████████▌| 406/427 [37:01<01:54, 5.45s/it]

Processing batches: 95%|█████████▌| 407/427 [37:06<01:48, 5.43s/it]

Processing batches: 96%|█████████▌| 408/427 [37:12<01:42, 5.41s/it]

Processing batches: 96%|█████████▌| 409/427 [37:17<01:38, 5.47s/it]

Processing batches: 96%|█████████▌| 410/427 [37:23<01:32, 5.44s/it]

Processing batches: 96%|█████████▋| 411/427 [37:28<01:26, 5.43s/it]

Processing batches: 96%|█████████▋| 412/427 [37:33<01:21, 5.43s/it]

Processing batches: 97%|█████████▋| 413/427 [37:39<01:16, 5.48s/it]

Processing batches: 97%|█████████▋| 414/427 [37:44<01:10, 5.45s/it]

Processing batches: 97%|█████████▋| 415/427 [37:50<01:05, 5.49s/it]

Processing batches: 97%|█████████▋| 416/427 [37:56<01:01, 5.55s/it]

Processing batches: 98%|█████████▊| 417/427 [38:01<00:55, 5.50s/it]

Processing batches: 98%|█████████▊| 418/427 [38:06<00:49, 5.46s/it]

Processing batches: 98%|█████████▊| 419/427 [38:12<00:43, 5.43s/it]

Processing batches: 98%|█████████▊| 420/427 [38:17<00:37, 5.40s/it]

Processing batches: 99%|█████████▊| 421/427 [38:23<00:32, 5.42s/it]

Processing batches: 99%|█████████▉| 422/427 [38:28<00:27, 5.44s/it]

Processing batches: 99%|█████████▉| 423/427 [38:34<00:22, 5.51s/it]

Processing batches: 99%|█████████▉| 424/427 [38:39<00:16, 5.46s/it]

Processing batches: 100%|█████████▉| 425/427 [38:44<00:10, 5.42s/it]

Processing batches: 100%|█████████▉| 426/427 [38:50<00:05, 5.43s/it]

Processing batches: 100%|██████████| 427/427 [38:51<00:00, 4.08s/it]

Processing batches: 100%|██████████| 427/427 [38:51<00:00, 5.46s/it]

articles ------------- (768, 4262)

authors ------------- (768, 694)

teams ------------- (768, 33)

labs ------------- (768, 549)

words ------------- (768, 4645)

Each matrix has 768 rows, one per dimension of the BERT embedding, and one column per entity.

We will project them on a 2D space to be able to map them.

UMAP projection

UMAP (Uniform Manifold Approximation and Projection) is a dimension reduction technique that, like t-SNE, can be used for visualisation.

We use this algorithm to project our matrices in 2 dimensions.

from cartodata.pipeline.projection2d import UMAPProjection # noqa

umap_projection = UMAPProjection(n_neighbors=15, min_dist=0.1)

pipeline.set_projection_2d(umap_projection)

Now we can run the UMAP projection on the BERT matrices.

matrices_2D = pipeline.do_projection_2D(force=True)

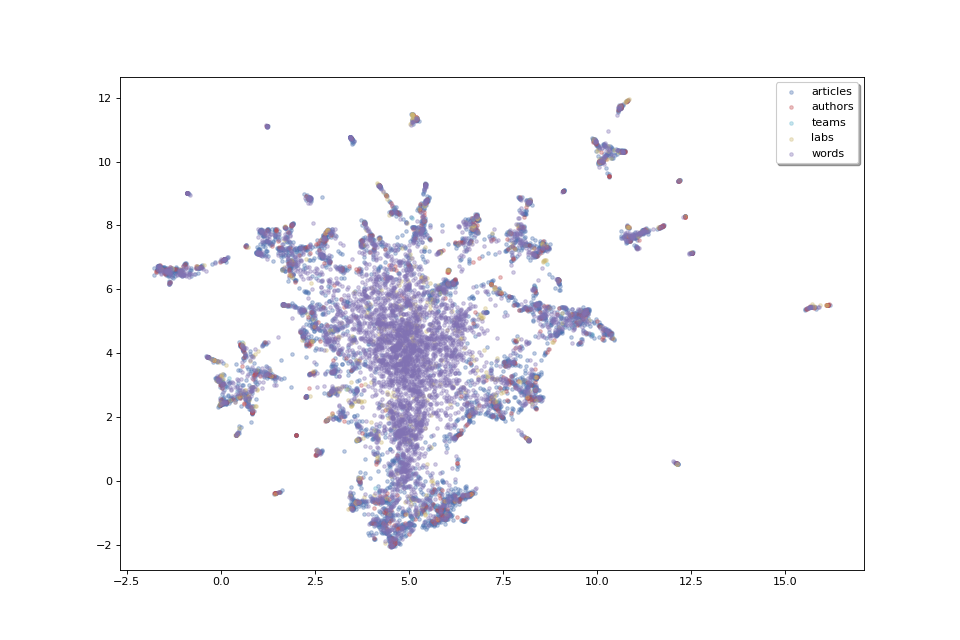

Now that we have 2D coordinates for our points, we can try to plot them to get a feel of the data’s shape.

labels = tuple(pipeline.natures)

colors = ['b', 'r', 'c', 'y', 'm']

fig, ax = pipeline.plot_map(matrices_2D, labels, colors)

Without labels for the points, the plot above is hard to interpret as is. Still, the data shows some clusters, which we can try to identify.

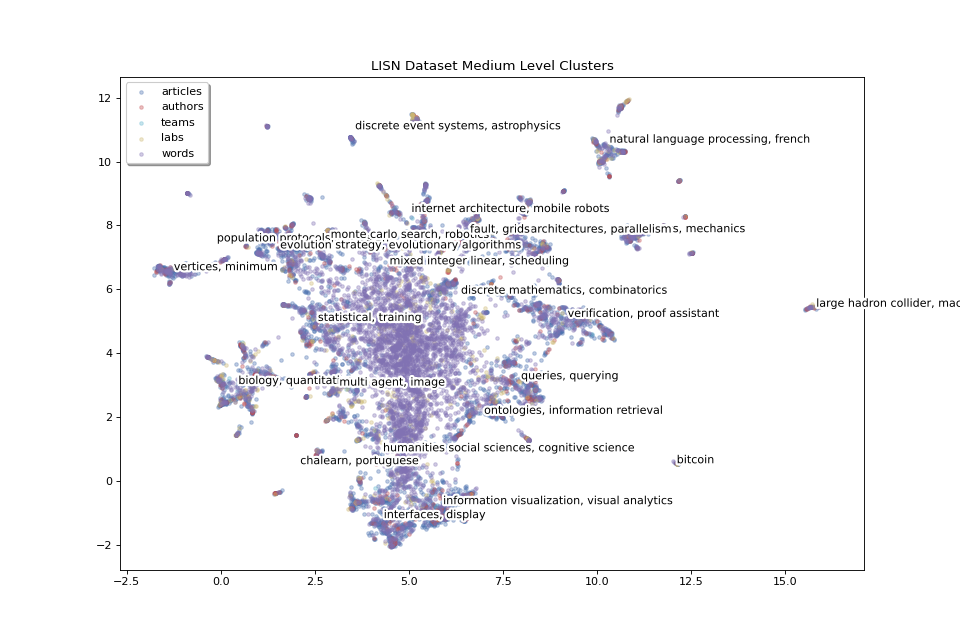

Clustering¶

In order to identify clusters, we use the KMeans clustering technique on the articles. We’ll also try to label these clusters by selecting the most frequent words that appear in each cluster’s articles.

from cartodata.pipeline.clustering import KMeansClustering # noqa

# level of clusters, hl: high level, ml: medium level

cluster_natures = ["hl_clusters", "ml_clusters"]

kmeans_clustering = KMeansClustering(n=8, base_factor=3, natures=cluster_natures)

pipeline.set_clustering(kmeans_clustering)
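The labelling idea described above (naming a cluster after the most frequent words in its articles) can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the toy data and the `label_clusters` helper are hypothetical and are not part of cartodata, whose actual labelling may weight or filter words differently.

```python
from collections import Counter

# Hypothetical toy data: the cluster id assigned to each article and the
# words each article contains (not taken from the LISN dataset).
article_clusters = [0, 0, 1, 1, 1]
article_words = [
    ["network", "protocol"],
    ["network", "routing"],
    ["learning", "neural"],
    ["learning", "kernel"],
    ["neural", "learning"],
]


def label_clusters(clusters, words, n_words=2):
    """Return {cluster id: label built from its most frequent words}."""
    counts = {}
    for cluster_id, doc_words in zip(clusters, words):
        # Accumulate word counts over all articles of the same cluster.
        counts.setdefault(cluster_id, Counter()).update(doc_words)
    return {
        cluster_id: ", ".join(word for word, _ in counter.most_common(n_words))
        for cluster_id, counter in counts.items()
    }


cluster_names = label_clusters(article_clusters, article_words)
print(cluster_names)
```

In this toy case the second cluster is named after "learning" and "neural", the two words that occur most often in its three articles.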

Now we can run clustering on the matrices.

(clus_nD, clus_2D, clus_scores, cluster_labels,

cluster_eval_pos, cluster_eval_neg) = pipeline.do_clustering()

As we have specified two levels of clustering, each of the returned lists will have two values.

len(clus_2D)

2

We will now display the two levels of clusters in separate plots, starting with the high level clusters:

clus_scores_hl = clus_scores[0]

clus_mat_hl = clus_2D[0]

fig_hl, ax_hl = pipeline.plot_map(matrices_2D, labels, colors,

title="LISN Dataset High Level Clusters",

annotations=clus_scores_hl.index, annotation_mat=clus_mat_hl)

The 8 high level clusters that we created give us a general idea of what the big clusters of data contain.

With medium level clusters we have a finer level of detail:

clus_scores_ml = clus_scores[1]

clus_mat_ml = clus_2D[1]

fig_ml, ax_ml = pipeline.plot_map(matrices_2D, labels, colors,

title="LISN Dataset Medium Level Clusters",

annotations=clus_scores_ml.index, annotation_mat=clus_mat_ml,

annotation_color='black')

We have 24 medium level clusters. We can increase the number of clusters to have even finer details to zoom in and focus on smaller areas.
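The counts of 8 and 24 are consistent with each level multiplying the number of clusters by `base_factor`. The sketch below makes that arithmetic explicit. The formula is an assumption inferred from the numbers on this page, not taken from the cartodata source, and `clusters_per_level` is a hypothetical helper.

```python
def clusters_per_level(n, base_factor, n_levels):
    """Assumed rule: level i has n * base_factor ** i clusters."""
    return [n * base_factor ** level for level in range(n_levels)]


# Same values as in KMeansClustering(n=8, base_factor=3) with two natures.
counts = clusters_per_level(n=8, base_factor=3, n_levels=2)
print(counts)  # [8, 24]
```

Under the same assumption, a third level would contain 72 clusters.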

Now we will save the plots in the working_dir directory.

pipeline.save_plots()

for file in pipeline.working_dir.glob("*.png"):

    print(file)

Nearest neighbors¶

One more thing which could be useful to appreciate the quality of our data would be to get each point’s nearest neighbors. If our data processing is done correctly, we expect the related articles, labs, words and authors to be located close to each other.

Finding nearest neighbors is a common task, and various algorithms exist to solve it. The find_neighbors method uses one of these algorithms to find the nearest points of all entities (articles, authors, teams, labs, words). It takes an optional weight parameter that adjusts the distance calculation, so that points with a higher score can be selected even if they are slightly farther away, instead of just the closest neighbors.

from cartodata.pipeline.neighbors import AllNeighbors

n_neighbors = 10

weights = [0, 0.5, 0.5, 0, 0]

neighboring = AllNeighbors(n_neighbors=n_neighbors, power_scores=weights)

pipeline.set_neighboring(neighboring)

pipeline.find_neighbors()
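To illustrate how a score weight can change which neighbors are selected, here is a minimal brute-force sketch in plain Python. The weighting rule (Euclidean distance divided by `score ** power_score`) is an assumption chosen for illustration, and `weighted_neighbors` is a hypothetical helper. The actual computation inside `AllNeighbors` may differ.

```python
import math


def weighted_neighbors(query, points, scores, power_score=0.0, n_neighbors=2):
    """Indices of the n_neighbors points with the smallest weighted distance.

    Assumed weighting: Euclidean distance divided by score ** power_score,
    so power_score=0 gives plain nearest neighbors. Scores must be positive.
    """
    weighted = []
    for index, (point, score) in enumerate(zip(points, scores)):
        distance = math.dist(query, point)
        weighted.append((distance / score ** power_score, index))
    return [index for _, index in sorted(weighted)[:n_neighbors]]


# Hypothetical points: the third one is farthest but has a much higher score.
points = [(0.0, 1.0), (0.0, 2.0), (0.0, 3.0)]
scores = [1.0, 1.0, 16.0]
query = (0.0, 0.0)

plain = weighted_neighbors(query, points, scores, power_score=0.0)
boosted = weighted_neighbors(query, points, scores, power_score=0.5)
print(plain, boosted)
```

With a weight of 0 the two closest points are returned. With a weight of 0.5 the distant but high-scoring point is ranked first, which is the kind of trade-off the weight parameter is meant to control.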

Export file using exporter¶

We can now export the data. To export the data, we need to configure the exporter.

The exported data will be the points extracted from the dataset corresponding to the entities that we have defined.

In the export file, we will have the following columns for each point:

| Column | Description |
|--------|-------------|
| nature | one of articles, authors, teams, labs, words |
| label | point’s label |
| score | point’s score |
| rank | point’s rank |
| x | point’s x location on the map |
| y | point’s y location on the map |
| nn_articles | neighboring articles to this point |
| nn_teams | neighboring teams to this point |
| nn_labs | neighboring labs to this point |
| nn_words | neighboring words to this point |

We will call the pipeline.export function. It creates the export.feather file and saves it under pipeline.working_dir.

pipeline.export()

Let’s display the contents of the file.

import pandas as pd # noqa

df = pd.read_feather(pipeline.get_clus_dir() / "export.feather")

df.head()

This is a basic export file. For each point, we can add additional columns.

For example, for each author, we can add labs and teams columns to list the labs and teams that the author belongs to. We can also merge the teams and labs into one column and name it labs. To do that, we first have to create an export configuration for the entity (nature) that we would like to modify.

from cartodata.pipeline.exporting import (

ExportNature, MetadataColumn

) # noqa

ex_author = ExportNature(key="authors",

refs=["labs", "teams"],

merge_metadata=[{"columns": ["teams", "labs"],

"as_column": "labs"}])

We can do the same for articles. Each article has teams and labs data and, additionally, the authors of the article, so we can set refs=["labs", "teams", "authors"].

The original dataset contains a column producedDateY_i, which holds the year in which the article was published. We can add this data as metadata for the point, renaming the column to the clearer name year. We can also specify a function to apply to the column values. In this example we convert the column values to strings.

meta_year_article = MetadataColumn(column="producedDateY_i", as_column="year",

func="x.astype(str)")

We will also add the halId_s column as url, setting an empty string where the value does not exist:

meta_url_article = MetadataColumn(column="halId_s", as_column="url", func="x.fillna('')")

ex_article = ExportNature(key="articles", refs=["labs", "teams", "authors"],

merge_metadata=[{"columns": ["teams", "labs"],

"as_column": "labs"}],

add_metadata=[meta_year_article, meta_url_article])

pipeline.export(export_natures=[ex_article, ex_author])

Now we can load the new export.feather file to see the difference.

df = pd.read_feather(pipeline.get_clus_dir() / "export.feather")

df.head()

For the points of nature articles, we have additional labs, authors, year, url columns.

Let’s see the points of nature authors:

df[df["nature"] == "authors"].head()

We have values for the labs field, but not for the authors, year, or url fields.

As we have not defined any relation for points of natures teams, labs and words, these new columns are empty for those points.

df[df["nature"] == "teams"].head()

df[df["nature"] == "labs"].head()

df[df["nature"] == "words"].head()

df['x'][1]

4.723880767822266

Export to json file¶

We can export the data to a json file as well.

export_json_file = pipeline.get_clus_dir() / 'lisn_workflow_lsa.json'

pipeline.exporter.export_to_json(export_json_file)

This creates the lisn_workflow_lsa.json file which contains a list of points ready to be imported into Cartolabe. Have a look at it to check that it contains everything.

import json # noqa

with open(export_json_file, 'r') as f:

    data = json.load(f)

data[1]

{'nature': 'articles', 'label': 'Efficient Self-stabilization', 'score': 1.0, 'rank': 1, 'position': [4.723880767822266, 8.428196907043457], 'neighbors': {'articles': [1, 358, 536, 60, 878, 501, 1941, 371, 233, 236], 'authors': [4262, 4293, 4341, 4303, 4273, 4278, 4282, 4402, 4368, 4538], 'teams': [4956, 4964, 4974, 4963, 4957, 4977, 4960, 4969, 4958, 4961], 'labs': [5313, 5106, 5012, 5108, 5031, 5109, 5200, 5113, 5233, 5084], 'words': [9579, 9580, 6775, 7176, 6781, 7177, 8075, 9864, 7162, 9163]}, 'labs': ',4992,4991,4990,4989', 'authors': '4262', 'year': '2000', 'url': 'tel-00124843'}
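Checking by eye that every point "contains everything" becomes tedious for thousands of points, so a small validation helper can do it instead. The sketch below assumes that the keys visible in the point above (nature, label, score, rank, position, neighbors) are the ones every point should carry. That set, the `find_incomplete_points` helper and the two-point sample are hypothetical and are not part of cartodata.

```python
import json

# Assumed set of mandatory keys, based on the point displayed above.
REQUIRED_KEYS = {"nature", "label", "score", "rank", "position", "neighbors"}


def find_incomplete_points(points):
    """Return (index, missing keys) for every point lacking a required key."""
    problems = []
    for index, point in enumerate(points):
        missing = REQUIRED_KEYS - set(point)
        if missing:
            problems.append((index, sorted(missing)))
    return problems


# Hypothetical two-point sample mimicking the structure of the export.
sample = json.loads("""
[
  {"nature": "articles", "label": "A", "score": 1.0, "rank": 1,
   "position": [4.7, 8.4], "neighbors": {"articles": [1, 358]}},
  {"nature": "authors", "label": "B", "score": 2.0, "rank": 0}
]
""")

problems = find_incomplete_points(sample)
print(problems)
```

On the real export, calling `find_incomplete_points(data)` with the list loaded above should return an empty list if every point has all of the assumed keys.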

Total running time of the script: (40 minutes 4.736 seconds)